Python's GIL and atomic reference counting

I love Python because it's an incredibly fun, expressive and productive language. However, it's often criticised for being slow. I think the correct answer to that is two-fold:

- Use an alternative Python implementation for impressive speed-ups. For example, PyPy is on average 7 times faster than the standard CPython.

- Not all parts of a program have to be blazingly fast. Use Python for all non-performance-critical areas, such as the UI and database access, and drop to C or C++ only when it's required for for CPU intensive tasks. This is easy to achieve with language binding generators such as CFFI, SWIG or SIP.

A further performance-related issue is Python's Global Interpreter Lock (GIL), which ensures that only one Python thread can run at a single time. This is a bit problematic, because it affects PyPy as well, unless you want to use its experimental software transactional memory support.

Why is this such a big deal? With the rise of multi-core processors,

multithreading is becoming more important as well. This not only affects

performance on large servers, it impacts desktop programs and is

crucial for battery life on mobile phones (race to idle). Further, other

programming languages make multi-threaded programming easier and easier. C,

C++, and Java have all moved to a common memory model for multithreading. C++

has gained std::atomic, futures, and first-class thread

support.

C# has async and await, which is proposed for

inclusion in C++ as well. This trend will only accelerate in the future.

With this in mind, I decided to investigate the CPython GIL. Previous proposals for its removal have failed, but I thought it's worth a look — especially since I couldn't find any recent attempts.

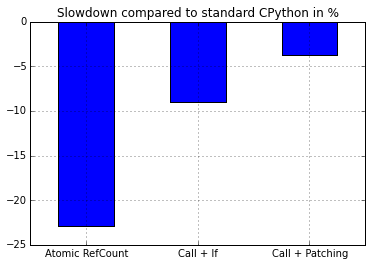

The results were not encouraging. Changing the reference count used by

Python objects from a normal int to an atomic type resulted in a

~23% slowdown

on my machine. This is without actually changing any of the

locking. This penalty could be moderated for single-threaded programs by only

using atomic instructions once a second thread is started. This requires a

function call and an if statement to check whether to use atomics or not in

the refcount hot path. Doing this still results in an 11% slowdown in the

single-threaded case. If hot-patching was used instead of the if, a 6%

slowdown remained.

The last result looks promising, but is deceiving. Hot-patching would rely

on having a single function to patch. Alas, the compiler decided to mostly

inline the Py_INCREF/Py_DECREF function calls. Disabling inlining of these

functions gives a 16% slowdown, which is worse than the "call + if" method.

Furthermore, hot-patching is probably not something that could be merged in

CPython anyway.

So what's the conclusion? Maybe turning Py_INCREF and Py_DECREF into

functions and living with the 11% slowdown of the single-threaded case

would be sell-able, if compelling speed-ups of multithreaded workloads could

be shown. It should be possible to convert one module at a time from the GIL to

fine-grained locking, but performance increases would only be expected once at

least a couple of core modules are converted. That would take a substantial

amount of work, especially given the high risk that the resulting patches

wouldn't be accepted upstream.

Where does this leave Python as a language in the multi-threaded world? I'm not sure. Since PyPy is already the solution to Python's performance issue, perhaps it can solve the concurrency problem as well with its software transactional memory mode.

PS: My profiling showed that the reference counting in

Python (Py_INCREF() and Py_DECREF()) takes up to about 5–10% of the

execution time of benchmarks (not including actual object destruction), crazy!

Comments